Generative AI is transforming industries, enabling businesses to automate content creation, enhance customer interactions, and streamline operations. From personalized marketing campaigns to AI-driven chatbots and intelligent document processing, organizations are rapidly integrating Generative AI into their workflows. Amazon Bedrock is a fully managed AWS AI service that enables businesses to build and scale secure and compliant AI applications. However, as adoption grows, so do concerns around Amazon Bedrock security, as businesses must ensure their AI applications are protected from cyber threats, data breaches, and regulatory non-compliance.

Generative AI applications often process sensitive data, making them prime targets for cyber threats, privacy violations, and regulatory scrutiny. Ensuring application security is not just about protecting models and infrastructure but also about adhering to strict data governance policies, mitigating bias, and maintaining transparency in AI decision-making. Businesses that overlook these challenges may face compliance issues, reputational setbacks, and operational inefficiencies.

This is where Amazon Bedrock security plays an important role. By leveraging its robust built-in security measures, AI governance frameworks, and integrations with AWS security services, you can enhance the security of your generative AI applications while maintaining compliance and safeguarding data integrity and privacy.

In this blog, we’ll explore how you can utilize Amazon Bedrock’s security features to mitigate risks, maintain compliance, and build AI applications with confidence. Whether you’re a CTO, DevOps engineer, or compliance officer, understanding these security fundamentals is crucial for leveraging Generative AI responsibly.

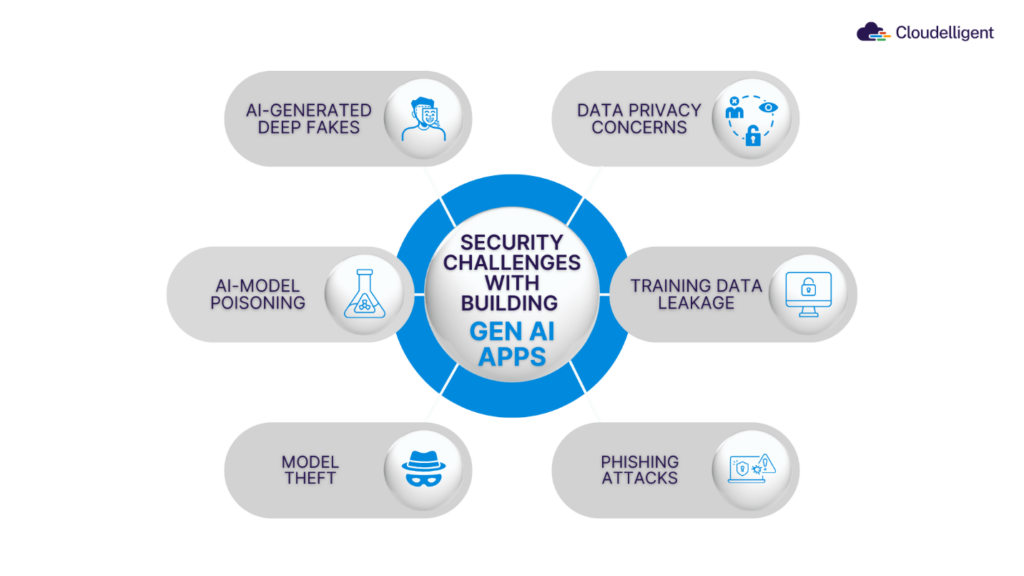

Security and Compliance Challenges with Generative AI Applications

As Generative AI applications become more sophisticated, so do the security and compliance risks they bring. Imagine an AI system accidentally exposing sensitive customer data or being manipulated into generating harmful content. That’s a serious problem—and exactly why Amazon Bedrock security is designed to address these challenges head-on. Let’s break it down.

Figure 1: Security Challenges with Building Generative AI Applications

Data Leakage: When AI Says Too Much

AI models learn from massive datasets, but sometimes they learn a little too well. Data leakage happens when a model unintentionally reveals sensitive or proprietary information in its outputs. Think of an AI chatbot disclosing confidential financial figures or an internal document making its way into the generated text. This can occur during both training and inference, leading to compliance violations and reputational damage.

With Amazon Bedrock security, your business can lock down access controls and encryption to prevent unauthorized data exposure. These safeguards help keep your customer data, trade secrets, and internal documents from slipping through the cracks.

Prompt Injection Attacks

Ever heard of prompt injection? It’s when attackers craft tricky inputs to manipulate an AI model’s responses—essentially tricking it into revealing information it shouldn’t or bypassing safety restrictions. This is especially concerning in AI-driven customer support, content moderation, and financial analysis, where manipulated responses could spread misinformation or cause serious security breaches.

A strong AI application security strategy is key. With Amazon Bedrock security, you can leverage input validation, fine-tuned model constraints, and real-time monitoring to detect and block adversarial prompts before they become a threat.

Staying on the Right Side of Compliance

AI isn’t just about innovation. It has to follow the rules. Generative AI models trained on personal data must comply with privacy regulations, which include managing data access, anonymization, and deletion. Depending on the industry, companies must comply with strict regulations such as:

- GDPR: Protecting user data and ensuring transparency.

- SOC 2: Ensures that AI applications meet strict security, availability, and confidentiality controls for handling customer data.

- HIPAA: Keeping healthcare data secure and private.

- FedRAMP: Meeting security standards for federal agencies.

Falling short on compliance isn’t just risky—it can lead to hefty fines, legal trouble, and operational disruptions. That’s why implementing governance features like access control policies, audit logs, and model explainability are crucial for responsible AI applications.

Why Amazon Bedrock Security is the Answer

With increasing security risks, businesses need a trusted foundation for their AI applications. Amazon Bedrock Security delivers robust encryption, compliance alignment, and continuous monitoring through AWS security services, helping you mitigate threats and maintain regulatory compliance.

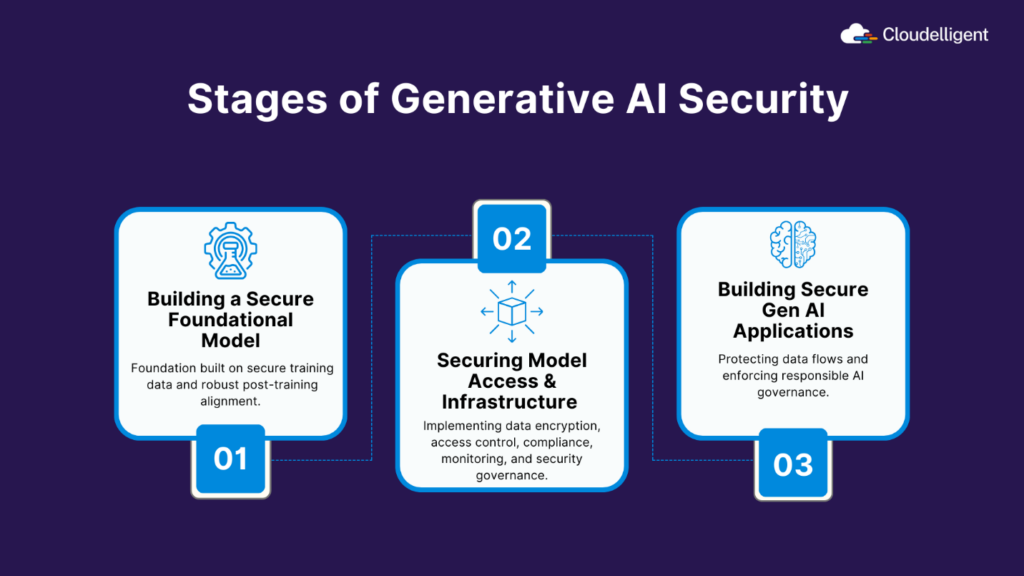

Inside Amazon Bedrock’s Security Architecture

Amazon Bedrock’s security architecture is designed with a layered approach to address the unique security challenges of Generative AI at each stage of the application lifecycle. This comprehensive strategy ensures the protection of your data, models, and applications. We can break down Bedrock’s security into three key stages:

Figure 2: Stages of Generative AI Security

1. Building a Secure Foundational Model

Amazon Bedrock ensures a secure foundation for generative AI models by incorporating security best practices throughout the model development lifecycle. This includes using high-quality, well-curated training data and applying robust post-training alignment techniques to enhance safety, compliance, and reliability.

How Amazon Bedrock Secures Foundation Models

- Secure Training Data: Models are trained on vetted datasets to minimize risks related to biased or harmful outputs. It’s important to note that AWS does not use your business data to train the foundation models on Amazon Bedrock.

- Post-Training Alignment: Continuous refinement ensures model responses adhere to ethical guidelines and compliance standards.

- Data Privacy Protections: Built-in mechanisms prevent unauthorized access and ensure data confidentiality.

- Robust Compliance Framework: Adheres to industry security and compliance standards to support regulatory requirements. With these measures, Amazon Bedrock provides a strong security foundation, enabling organizations to deploy AI models with confidence. However, customers remain responsible for managing access policies and model usage.

You can read our blog Mastering Cloud Security: Your Essential Guide to the AWS Shared Responsibility Model in 2025 for a detailed breakdown of AWS and customer security responsibilities.

2. Securing Model Access and Infrastructure

Protecting your AI applications means securing access and infrastructure at every level. Amazon Bedrock provides security tools and infrastructure, while you must configure them properly to prevent unauthorized access.

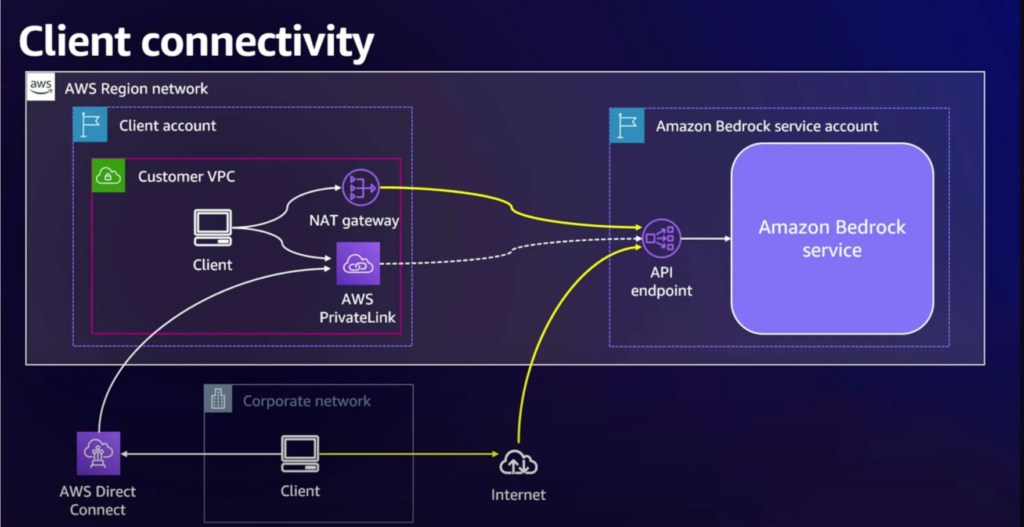

Figure 3: Secure Connectivity to Amazon Bedrock (community.aws)

Key Security Responsibilities of the Customer

- Access Control: Implement IAM-based authentication mechanisms to control user and application access.

- Role and Policy Management: Define IAM roles and policies to restrict API calls and enforce least privilege access.

- Network Security: Set up VPC and AWS PrivateLink to ensure network isolation and secure communication.

- Traffic Control: Configure network access controls to limit exposure and secure AI workloads.

- Data Protection: Enable encryption for data at rest and in transit to protect sensitive information.

- Threat Detection and Auditing: Enable Amazon GuardDuty for threat detection and Amazon CloudTrail for audit logging.

By leveraging AWS security features and properly configuring access controls, you can strengthen your AI security posture.

Want to explore automation in AI security? To learn how Bedrock Agents streamline compliance and security operations check out our blog Queries to Actions: How Amazon Bedrock Agents Are Revolutionizing Automation.

3. Building Secure Generative AI Applications

Developing secure Generative AI applications requires protecting data flows and enforcing responsible AI governance. While Amazon Bedrock provides security tools and frameworks, it is your responsibility to implement the right controls to safeguard your AI applications.

Key Security Responsibilities of the Customer

- Secure Data Handling: Implement stringent data access controls and encryption mechanisms to protect sensitive data throughout the application lifecycle.

- Responsible AI Governance: Establish clear policies and procedures for AI usage, ensuring ethical and compliant application behavior.

- Input Validation and Sanitization: Protect against prompt injection and other vulnerabilities by rigorously validating and sanitizing user inputs.

- Output Monitoring and Filtering: Implement mechanisms to monitor and filter AI-generated outputs, preventing the dissemination of harmful or inappropriate content.

- Access Control and Authentication: Utilize strong authentication and authorization mechanisms to restrict access to your generative AI applications and data.

By combining Amazon Bedrock security features with customer-driven security controls, organizations can confidently build, deploy, and scale AI applications while maintaining compliance and data integrity.

Best Practices to Build Secure Generative AI Applications on Amazon Bedrock

Amazon Bedrock provides a secure and compliant platform for building and deploying Generative AI applications. However, security is a shared responsibility, and organizations must implement additional security measures to protect sensitive data, enforce compliance, and mitigate threats. The following best practices help enhance security and governance for your AI applications built on Amazon Bedrock.

1. Implement Fine-Grained Access Control with AWS Identity and Access Management (IAM)

Security starts with controlling who can access your AI models and what they can do. AWS Identity and Access Management (IAM) lets you define strict permissions so that only authorized users and applications can interact with Amazon Bedrock.

Best Practices for IAM Security

- Define IAM Role Policies

- Restrict who can invoke models and the actions they can perform.

- Set permissions for listing, describing, invoking, or fine-tuning models.

- Apply least privilege access to limit unauthorized use and data exposure.

- Use AWS Organizations and SCPs (Service Control Policies)

- Manage security policies across multiple AWS accounts.

- Restrict non-compliant AI operations at an organizational level.

- Enforce security baselines for all Amazon Bedrock environments.

These access controls ensure that only the right people can interact with your AI models, reducing security risks.

2. Leverage AWS PrivateLink for Secure AI Communication

You can integrate AWS PrivateLink with other AI-related services to minimize exposure to the public internet while using IAM and encryption to securely access foundation models within AWS’s network.

Best Practices for Secure AI Communication

- Use encryption in transit (TLS) and IAM access controls to secure inference requests within your AWS environment.

- Minimize exposure by applying IAM-based restrictions and configuring VPC security controls where applicable.

- Secure communication between VPCs using AWS PrivateLink for related AI services (e.g., data storage, API integrations) to maintain network isolation and comply with security policies.

By leveraging AWS security tools, including PrivateLink, you can minimize exposure to external networks and enhance the overall security of your AI applications in Amazon Bedrock.

3. Enable Monitoring and Logging for AI Applications

Effective AI governance requires continuous monitoring and auditing of AI applications. You can integrate Amazon Bedrock with AWS CloudTrail and CloudWatch to track API calls, monitor inference requests, and gain real-time visibility into AI application activity.

Best Practices for Monitoring and Logging

- Use AWS CloudTrail for Auditing

- Log all API activity related to Amazon Bedrock models.

- Enable security teams to review access patterns and detect anomalies.

- Set Up CloudWatch for Performance Monitoring

- Track model performance, response times, and usage statistics.

- Identify unusual behaviors or latency issues that may indicate potential security threats.

- Leverage AWS Security Hub for Anomaly Detection

- Aggregate security findings from Amazon GuardDuty, AWS Config, and AWS CloudTrail.

- Identify misconfigurations and unauthorized activities to improve compliance and security posture.

With these tools, you can enhance visibility into your Generative AI applications, ensuring compliance and preventing security incidents.

4. Apply End-to-End Encryption and Data Privacy Controls

Protecting AI data is a top priority. With Amazon Bedrock, you must implement encryption and privacy controls to ensure secure AI interactions and maintain data confidentiality throughout its lifecycle.

Best Practices for Data Encryption and Privacy

- Encrypt Data in Transit using TLS 1.2 (minimum) to secure communication.

- Encrypt fine-tuned models and associated metadata with AWS Key Management Service (KMS).

- Use customer-managed KMS keys for fine-tuned models to maintain full encryption control.

Amazon Bedrock’s Data Privacy Policy

- Customer data is not used to train foundation models.

- Fine-tuned models are logically isolated per customer, reducing the risk of cross-tenant data exposure.

- It’s best practice to select AWS Regions that align with your compliance and data residency requirements.

By implementing these security measures, organizations can ensure confidentiality, integrity, and compliance when deploying Generative AI applications.

5. Meet Global Compliance Standards

Security isn’t just about threat prevention—it’s also about ensuring your Generative AI applications comply with industry regulations. Amazon Bedrock is built on AWS’s secure infrastructure, which adheres to global security and privacy standards. However, you are responsible for configuring security controls to meet your organization’s specific compliance needs.

Best Practices for Compliance

- Utilize AWS’s compliance programs, including ISO 27001, SOC 2, HIPAA, and GDPR, to align with regulatory requirements.

- Define where AI data is processed and stored to comply with regional data protection laws.

- Use IAM policies, AWS CloudTrail, and AWS Config to monitor and document compliance-related activities.

- Perform internal audits and leverage AWS Audit Manager to evaluate compliance posture.

By following these best practices, you can ensure that your generative AI applications meet industry regulations while maintaining security and data integrity.

6. Secure Model Customization and Fine-Tuning

Fine-tuning generative AI models introduces unique security challenges. Amazon Bedrock ensures that customized models remain private and are not used to train AWS foundation models.

Best Practices for Model Customization Security

- Keep fine-tuned models private to prevent unauthorized access.

- Store fine-tuned models securely in Amazon S3 with AWS KMS encryption to protect sensitive information.

- Regularly review and rotate encryption keys to maintain data security and prevent unauthorized.

- Use IAM policies to control access and restrict who can fine-tune or invoke custom models.

By following these security best practices, you can ensure that model customization does not compromise data privacy or expose sensitive business information.

Develop Secure Generative AI Applications with Cloudelligent

Security and compliance shouldn’t be barriers to innovation. They should be the foundation of your AI strategy. At Cloudelligent, we help businesses harness the power of Amazon Bedrock while ensuring robust security, governance, and compliance every step of the way. With our deep AWS expertise, you can confidently build and scale Generative AI applications without sacrificing security or regulatory compliance.

Here’s how we empower your AI journey:

- Fortify Amazon Bedrock security with AWS best practices to protect AI models and data.

- Ensure compliance with industry regulations through governance-driven security controls.

- Scale AI solutions securely without sacrificing performance or protection.

Ready to secure your AI-powered solutions? Partner with Cloudelligent today to build and deploy secure, compliant, and high-performance AI applications on Amazon Bedrock. Take advantage of our FREE AI and Machine Learning Assessment to evaluate your security posture and compliance needs.