“Why choose between A or B when you can have both?” This question from Matt Garman’s first re:Invent keynote as AWS CEO perfectly captured AWS’s approach to innovation. Garman rejected the “Tyranny of the OR” thinking that often limits technology choices, championing instead the “Genius of the AND” – AWS’s commitment to delivering solutions where customers don’t have to compromise.

Figure 1: Matt Garman taking center stage at AWS re:Invent 2024

This mindset was evident throughout the keynote as AWS unveiled innovations that push traditional boundaries. Want AI acceleration that’s both powerful and cost-efficient? Need databases that are globally consistent without sacrificing speed? AWS delivered on these seemingly contradictory goals and more. The message was clear: in the AWS ecosystem, you no longer have to choose between competing priorities.

Let’s take a closer look at the key services and technologies that stood out at AWS re:Invent 2024.

Compute

AWS continues to redefine compute with innovations that deliver more power, efficiency, and scalability for all kinds of workloads.

Graviton4

AWS’s latest custom processor marks a major leap in performance and efficiency. Graviton4 delivers 30% more compute power per core and triples the memory compared to Graviton3. Pinterest illustrates the real-world impact: a 47% reduction in compute costs and 62% lower carbon emissions after migration. “Let’s put this into context,” Garman shared. “In 2019, all of AWS was a $35 billion business. Today, there’s as much Graviton running in the AWS fleet as all compute in 2019.”

Amazon EC2 Trainium2 Instances

Now generally available, Trainium2 brings AI acceleration to a new level with 30-40% better price performance than current GPU instances. Each instance packs 16 Trainium2 chips connected through the high-bandwidth, low-latency NeuronLink interconnect, delivering 20.8 petaflops of compute from a single node. “These are purpose-built for the demanding workloads of cutting-edge generative AI training and inference,” Garman emphasized.

Amazon EC2 Trainium2 UltraServers

For organizations training massive AI models, Trainium2 UltraServers combine 64 Trainium2 chips to deliver over 83 petaflops in a single node. Anthropic’s Project Rainier showcases this power, using these servers to build a cluster with five times the compute of their current Claude models’ training infrastructure.
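The two Trainium2 figures quoted above are consistent with each other, which a quick calculation can show. The sketch below derives the per-chip compute implied by the 16-chip instance and scales it to 64 chips. It assumes throughput scales linearly with chip count, which is a simplification for illustration and not a published AWS specification.

```python
# Figures quoted in the keynote recap above.
INSTANCE_CHIPS = 16
INSTANCE_PETAFLOPS = 20.8
ULTRASERVER_CHIPS = 64

# Per-chip compute implied by the single-instance figure.
per_chip = INSTANCE_PETAFLOPS / INSTANCE_CHIPS

# Assumption: compute scales linearly with the number of chips.
ultraserver_estimate = per_chip * ULTRASERVER_CHIPS

print(f"Implied per-chip compute: {per_chip:.2f} petaflops")
print(f"Linear estimate for {ULTRASERVER_CHIPS} chips: {ultraserver_estimate:.1f} petaflops")
```

The linear estimate comes to roughly 83.2 petaflops, in line with the "over 83 petaflops" quoted for the 64-chip configuration.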

Trainium3

Coming in 2025, Trainium3 represents AWS’s first chip built on 3-nanometer process technology. It promises double the compute power of Trainium2 while improving efficiency by 40%, demonstrating AWS’s commitment to pushing the boundaries of AI infrastructure.

Storage

Managing and analyzing data gets easier with storage solutions that focus on speed, scalability, and simplicity.

Amazon S3 Tables

Amazon S3 Tables transforms data analytics with storage optimized specifically for Apache Iceberg tables. The results are impressive: 3x faster query performance and 10x higher transactions per second compared to general-purpose S3 buckets. It automatically handles table maintenance, compaction, and snapshot management, which eliminates the operational overhead traditionally associated with managing analytical data stores.

Amazon S3 Metadata

Finding specific objects among billions just got easier with Amazon S3 Metadata. This new capability automatically captures and indexes metadata when objects are added or modified, making them queryable within minutes. It integrates seamlessly with analytics tools like Amazon Athena, Redshift, and QuickSight, enabling efficient data discovery without building custom metadata management systems.

Amazon FSx Intelligent-Tiering

Amazon FSx Intelligent-Tiering brings automatic tiering to NAS data sets. Data moves automatically across frequently accessed, infrequently accessed, and archival tiers, while the service delivers up to 400K IOPS and 20 GB per second of throughput, all integrated with AWS services.

What is even better? This new storage class is priced 85% lower than the current SSD storage class and 20% lower than traditional on-premises HDD deployments. Plus, you get full elasticity and intelligent tiering to optimize your storage costs and performance like never before.

Databases

AWS databases combine global scalability with high performance, ensuring your data works as fast as your business grows.

DynamoDB Multi-Region Strong Consistency

DynamoDB’s global tables now support strong consistency across regions without compromising latency. This enhancement eliminates a long-standing trade-off in distributed databases, enabling global applications to maintain data consistency while delivering fast local performance.

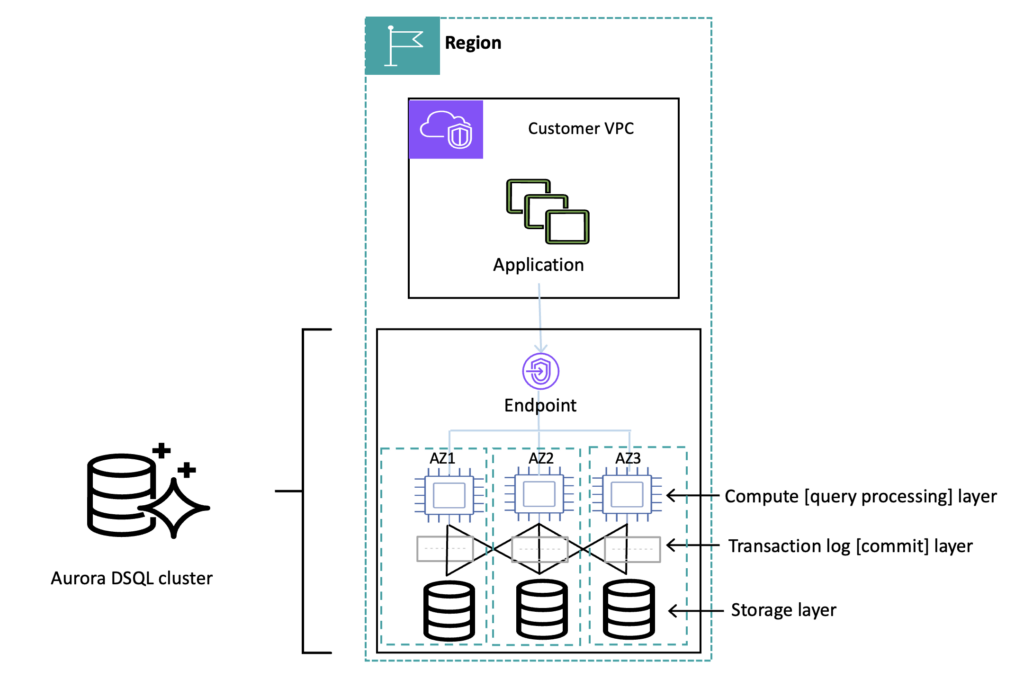

Amazon Aurora DSQL

Breaking new ground in distributed databases, Amazon Aurora DSQL delivers what many thought impossible: strong consistency with low latency across regions. “We separated the transaction processing from the storage layer,” Garman explained. The result is a distributed SQL database that performs 4x faster than Google Spanner while maintaining five-nines availability and serverless scalability.

Figure 2: Aurora DSQL’s Highly Available Cluster Topology (Source: aws.amazon.com)

Generative AI and Machine Learning

AI and ML innovations at AWS are making it possible to solve complex challenges and build smarter systems across industries.

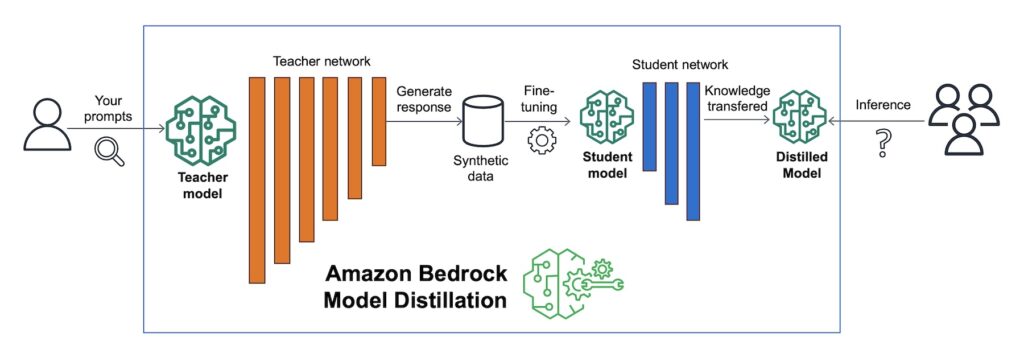

Amazon Bedrock Model Distillation

Amazon Bedrock Model Distillation transforms how organizations deploy AI models. It enables knowledge transfer from large to smaller models, resulting in up to 500% faster performance at 75% lower cost. The process is automated – simply provide your prompts, and Bedrock handles the complexity of creating efficient, specialized models.

Figure 3: Amazon Bedrock Model Distillation Process (Source: aws.amazon.com)

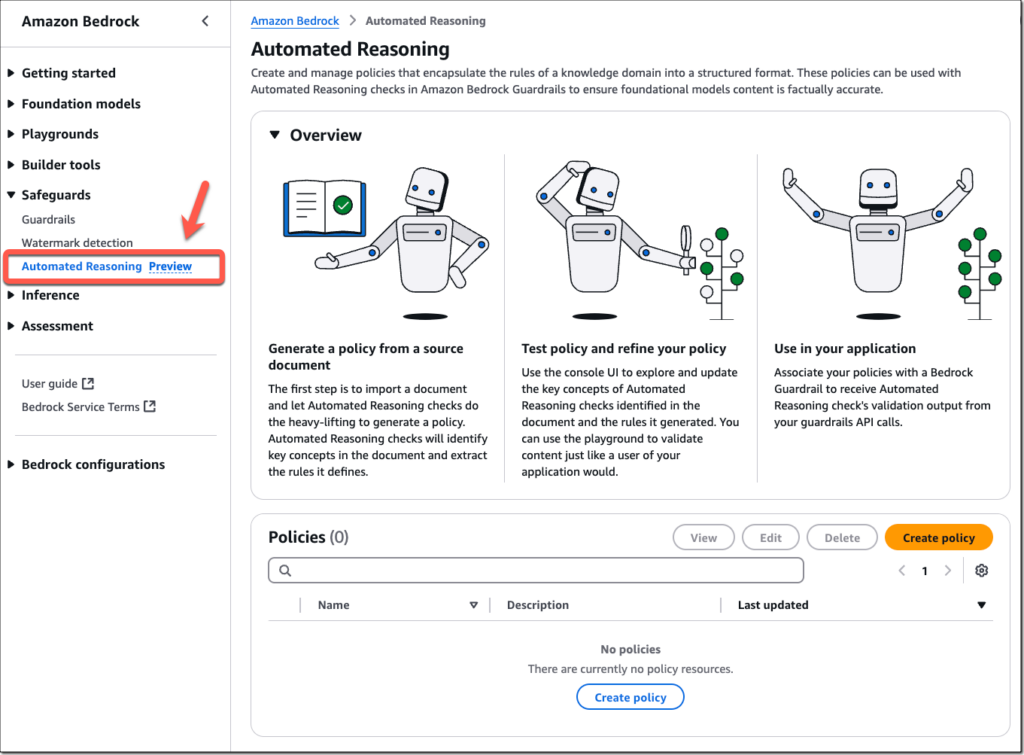

Amazon Bedrock Automated Reasoning

A breakthrough in AI reliability, Automated Reasoning mathematically verifies model outputs to prevent hallucinations. “When somebody’s asking about insurance coverage, you need to get that right,” Garman emphasized. The system ensures factual accuracy in high-stakes scenarios by validating responses against verified knowledge bases.

Figure 4: Automated Reasoning (Preview) (Source: aws.amazon.com)

Amazon Bedrock Multi-Agent Collaboration

Multi-Agent Collaboration introduces a new paradigm in AI orchestration. A supervisor agent coordinates specialized agents handling various aspects of complex tasks. Garman demonstrated this with a real estate analysis example where different agents analyze economic factors, market dynamics, and financial projections, while the supervisor ensures optimal collaboration.
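The supervisor pattern described above can be sketched in a few lines of plain Python. This is a conceptual illustration only and does not use the Amazon Bedrock API. The agent functions, the `Supervisor` class, and the sample task are all hypothetical stand-ins, and a real agent would call a model where these functions return fixed strings.

```python
from typing import Callable, Dict

# Hypothetical specialist agents: each takes a task and returns a finding.
# Plain functions stand in for model-backed agents.
def economic_agent(task: str) -> str:
    return f"economic factors reviewed for {task}"

def market_agent(task: str) -> str:
    return f"market dynamics reviewed for {task}"

def financial_agent(task: str) -> str:
    return f"financial projections drafted for {task}"

class Supervisor:
    """Sends one task to every registered specialist and merges the results."""

    def __init__(self, specialists: Dict[str, Callable[[str], str]]) -> None:
        self.specialists = specialists

    def run(self, task: str) -> Dict[str, str]:
        # Fan out: ask each specialist for its part of the analysis.
        findings = {name: agent(task) for name, agent in self.specialists.items()}
        # Fan in: add a combined summary next to the individual findings.
        findings["summary"] = "; ".join(findings[name] for name in self.specialists)
        return findings

supervisor = Supervisor({
    "economic": economic_agent,
    "market": market_agent,
    "financial": financial_agent,
})
report = supervisor.run("a downtown office property")
for section, text in report.items():
    print(f"{section}: {text}")
```

Keeping the specialists behind a simple name-to-callable mapping means a new specialist can be registered without changing the supervisor's logic.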

Amazon Nova Models

AWS’s new family of foundation models, Amazon Nova, brings a comprehensive suite:

- Amazon Nova Micro: A text-only model optimized for ultra-low latency and minimal cost.

- Amazon Nova Lite: An affordable multimodal model offering lightning-fast processing for text, image, and video inputs.

- Amazon Nova Pro: A versatile multimodal model that balances top-tier accuracy, speed, and cost for diverse use cases.

- Amazon Nova Premier: The most capable of Amazon’s multimodal models for complex reasoning tasks.

- Amazon Nova Canvas: A cutting-edge model designed for advanced image generation.

- Amazon Nova Reel: A state-of-the-art model specializing in video generation.

Looking ahead to 2025, AWS plans to introduce speech-to-speech and multimodal-to-multimodal processing, further expanding the potential of foundation models in AI innovation.

Developer Tools

From building apps to modernizing systems, AWS tools empower developers to work faster, smarter, and more efficiently.

Amazon Q Developer Transformation

Amazon Q Developer introduces specialized solutions for .NET, VMware, and mainframe workloads, offering significant gains in speed and cost efficiency.

- For .NET Applications: Amazon Q Developer accelerates the migration from Windows to Linux, delivering transformations 4x faster while enabling parallel processing of hundreds of applications. This approach reduces modernization costs by 40% and is now available in preview.

- For VMware Workloads: By identifying dependencies and transforming network configurations, Amazon Q Developer simplifies VMware modernization. It also enables agents to convert on-premises code to cloud-ready equivalents. This is now available in preview.

- For Mainframes: Amazon Q Developer expedites legacy mainframe migrations with automated code analysis, refactoring, and documentation creation—ideal for building a cloud-ready architecture. This is also available in preview.

As Garman noted, “What previously took weeks or months can now be done in minutes.”

Amazon Q Developer Agents

Three new autonomous agents join the Q Developer family: unit test generation, documentation creation, and code review automation. A new GitLab integration brings these capabilities directly into developers’ workflows, complementing existing integrations with Slack, the AWS Management Console, and VS Code.

Amazon Q Developer for Operations

This new offering helps teams investigate AWS environment issues in record time. Guided workflows analyze Amazon CloudWatch and AWS CloudTrail logs, detect anomalies like missing IAM permissions, and suggest remediations based on runbooks and documentation.
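To make the idea of spotting missing IAM permissions in logs concrete, here is a minimal sketch of the kind of check involved. It is not how Amazon Q Developer works internally. It scans CloudTrail-style records for error codes that usually signal a denied call. The field names (`errorCode`, `eventSource`, `eventName`, `userIdentity.arn`) follow the CloudTrail record format, while the sample records, the ARNs, and the short list of error codes are made up for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Error codes that commonly indicate a missing permission.
# Assumption: this list is illustrative, not exhaustive.
DENIED_CODES = {"AccessDenied", "AccessDeniedException", "Client.UnauthorizedOperation"}

def find_denied_calls(events: Iterable[Dict]) -> List[Tuple[str, str]]:
    """Return (principal ARN, 'service:Action') pairs for denied API calls."""
    denied = []
    for event in events:
        if event.get("errorCode") in DENIED_CODES:
            principal = event.get("userIdentity", {}).get("arn", "unknown")
            # "s3.amazonaws.com" -> "s3"
            service = event.get("eventSource", "").split(".")[0]
            denied.append((principal, f"{service}:{event.get('eventName', '')}"))
    return denied

# Made-up sample records for illustration.
APP_ROLE = "arn:aws:sts::123456789012:assumed-role/app-role/session-1"
OPS_ROLE = "arn:aws:sts::123456789012:assumed-role/ops-role/session-2"
sample_events = [
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "errorCode": "AccessDenied", "userIdentity": {"arn": APP_ROLE}},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "errorCode": "AccessDenied", "userIdentity": {"arn": APP_ROLE}},
    {"eventSource": "s3.amazonaws.com", "eventName": "PutObject",
     "userIdentity": {"arn": APP_ROLE}},  # successful call, no errorCode
    {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances",
     "errorCode": "Client.UnauthorizedOperation", "userIdentity": {"arn": OPS_ROLE}},
]

counts = Counter(find_denied_calls(sample_events))
for (principal, action), n in counts.most_common():
    print(f"{principal} was denied {action} {n} time(s)")
```

Grouping denials by principal and action points directly at the permission that may be missing from a role's policy, which is the kind of remediation hint the paragraph above describes.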

Business Intelligence

AWS is transforming how businesses make decisions by connecting data and analytics for clearer and more actionable insights.

Amazon QuickSight and Amazon Q Business Unstructured Data

AWS elevates traditional Business Intelligence (BI) tools by integrating unstructured data into Amazon QuickSight. This capability bridges the gap between siloed data and actionable insights, allowing organizations to harness the power of real-time analytics and data visualization. Garman emphasized that the combination of Amazon Q Business data and QuickSight goes beyond standard BI, enabling faster, deeper, and more intelligent decision-making.

ISV Integration with Amazon Q Index

The Amazon Q Index for independent software vendors (ISVs) introduces a powerful new way for developers to access and manage data across multiple applications through a unified API. This centralized, single-index approach provides enhanced security and granular control over permissions, streamlining data governance. Now generally available, the integration gives ISVs the tools to securely manage data from diverse sources, fostering collaboration and operational efficiency across enterprise ecosystems.

Amazon Q Business for Automating Complex Workflows

AWS is pushing workflow automation to the next level with Amazon Q Business, which helps simplify and streamline complex enterprise processes. This feature will soon use AI to automate workflow creation from documentation or recorded processes, reducing manual effort and improving adaptability to real-time changes.

With over 50 enterprise-ready actions, it enables seamless execution of tasks across tools like ServiceNow, PagerDuty, and Asana. Garman highlighted the potential for organizations to achieve unparalleled efficiency by integrating AI-driven automation into their operational workflows.

Analytics

Simplified, unified, and scalable AWS analytics tools are built to make sense of even the most complex data.

Amazon SageMaker Unified Studio

The next generation of SageMaker unifies data, analytics, and AI development in a single interface. “We’re taking the SageMaker that you all know and love and expanding it,” Garman explained. The platform, now in preview, consolidates functionality across analytics and AI tools, offering integrated data catalog and governance controls.

Amazon SageMaker Lakehouse

AWS simplifies analytics and AI with the SageMaker Lakehouse, an open, unified, and secure data lakehouse solution. Built to unify access to data across S3, Redshift, SaaS, and federated sources, the lakehouse supports fine-grained access control for robust data governance. Apache Iceberg compatibility ensures seamless integration with third-party tools and APIs, giving organizations the flexibility to work with their preferred platforms. SageMaker Lakehouse is now generally available, enabling scalable and secure data analytics for enterprises of all sizes.

Zero Extract, Transform, and Load (ETL) for Applications

This capability eliminates the need for custom data pipelines between AWS services and popular SaaS applications. Now generally available, it enables seamless data flow between Aurora, RDS, DynamoDB, Redshift, and third-party applications while maintaining security and governance controls.

AWS and Cloudelligent’s Shared Vision of the ‘AND’

AWS’s latest innovations represent a bold new approach to solving challenges without compromise. As Matt Garman emphasized in his keynote, the “Tyranny of the OR” forces organizations to make trade-offs: choosing speed over security, cost-efficiency over performance, or innovation over reliability. With AWS’s advancements, such as Trainium2 UltraServers enabling next-gen generative AI at scale, Amazon Q Developer automating modernization workflows, and Zero ETL eliminating complex data pipelines, the “Genius of the AND” is a reality. These innovations empower businesses to achieve exceptional performance, scalability, and efficiency, all at once.

At Cloudelligent, we are proud to align with AWS’s vision of the ‘AND’. Our commitment to customer obsession drives us to deliver solutions that eliminate trade-offs.

Join us at AWS re:Invent 2024 in Las Vegas to explore how these groundbreaking advancements can reshape your business. Together, we’ll unlock the potential of the ‘AND’ to build the future without limits.

Let’s connect, talk through your unique challenges, and discover how we can work together to unlock the next level of innovation for your business.