The era of data-driven decisions is upon us. With data volumes skyrocketing and complexity multiplying, the journey to modernize legacy systems and unlock the full potential of data can be daunting. In today’s world, where insights power every critical decision, data modernization has become the cornerstone of business transformation. This process involves upgrading an organization’s data infrastructure, governance, and management practices to meet the demands of modern analytics and operations.

However, managing large datasets—scaling from terabytes to petabytes—remains one of the most significant challenges. Without the right strategies, these massive volumes of data can overwhelm existing systems and create bottlenecks in efficiency and innovation. In this blog, we’ll explore how AWS tools and strategies can help organizations tackle these hurdles head-on, ensuring a smooth, scalable, and efficient path to data modernization.

Curious about how to unlock the full potential of your data? Check out our blog on Optimizing Your Strategy with the AWS Data Acceleration Program (DAP).

Demystifying Data Modernization

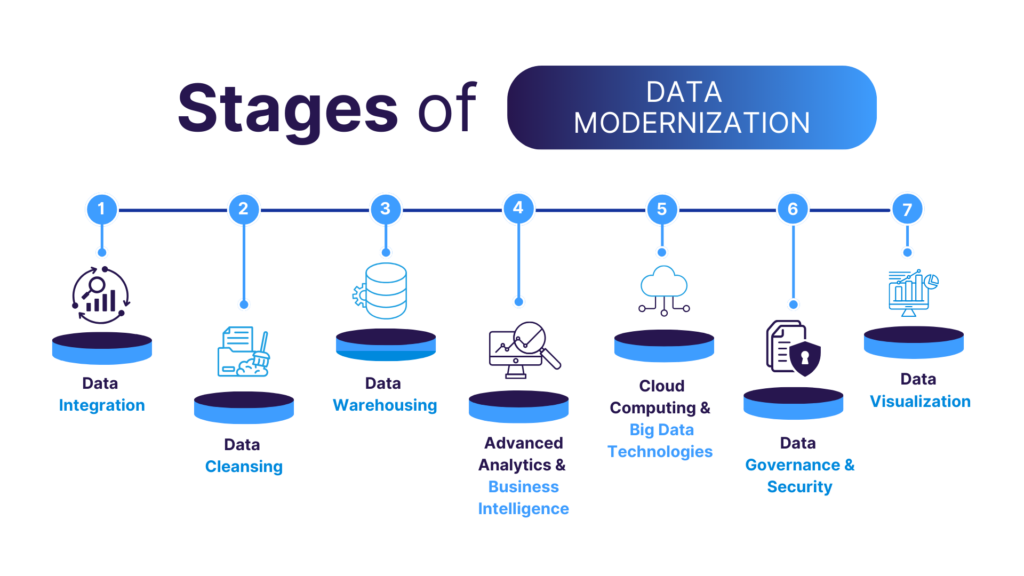

Data modernization goes beyond simply upgrading systems. It’s about empowering organizations to harness data for driving innovation and making smarter decisions. This transformation requires reimagining legacy systems and processes to build an agile, scalable, and efficient data ecosystem. By breaking down the complexities, businesses can navigate this transformation with confidence and align their data strategies with modern demands.

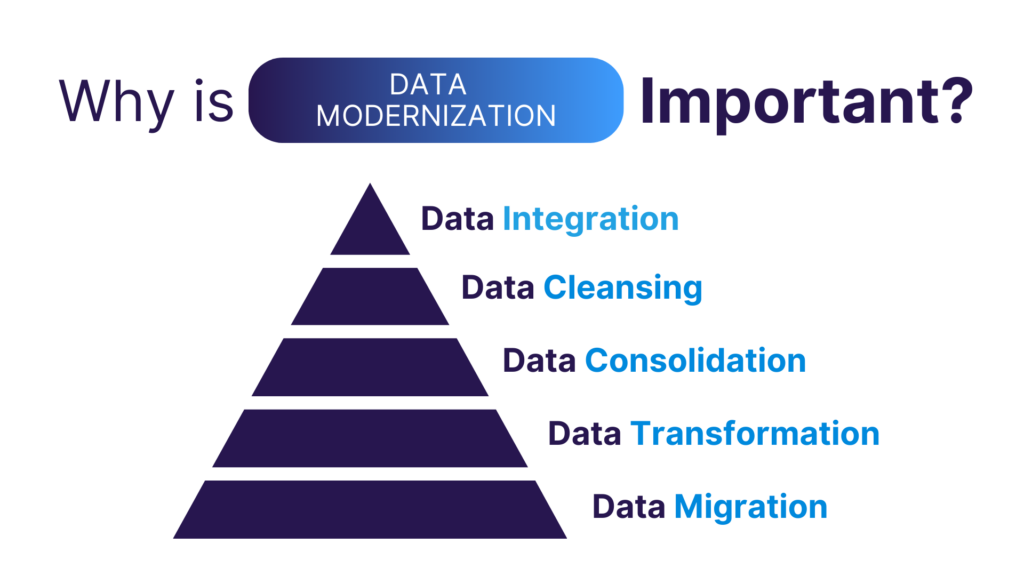

Figure 1: Stages of Data Modernization

Why Modernize Data?

Outdated infrastructure and large data volumes are major challenges for organizations seeking to stay competitive. Legacy systems often struggle to efficiently process massive datasets, resulting in operational bottlenecks and delayed insights. This can hinder timely decision-making and stifle innovation.

Data modernization resolves these issues by upgrading infrastructure and practices. It involves moving data from outdated, siloed databases to cloud-based platforms, and improving data analytics with cloud data lakes and warehouses. However, there are key challenges to overcome in the data modernization process, some of which are discussed in the next section.

Figure 2: Why is Data Modernization Important?

Want to build a rock-solid foundation for your SaaS business? Download our eBook and let us guide you through modernizing your AWS application architecture.

Challenges in Data Modernization

Large datasets are just one of the challenges associated with data modernization. Here are a few more key issues that need to be addressed:

- Legacy System Integration: Modernizing data often requires integrating with older, incompatible systems. This can lead to interoperability issues, data inconsistencies, and disruptions in ongoing operations.

- Skill Shortages: Data modernization demands expertise in emerging technologies like cloud databases, AI/ML, and containerization. The shortage of skilled professionals in the field of big data can slow down progress and complicate the implementation process.

- Data Security and Compliance: Ensuring robust security and compliance during the modernization process is essential, especially when handling sensitive data. This includes implementing encryption and access controls, and maintaining regulatory compliance across modern and legacy systems.

- Data Quality Management: Migrating large volumes of data increases the risk of errors and inconsistencies. Maintaining data quality during migration and transformation is crucial to avoiding poor decision-making and unreliable insights.
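To make the data quality point concrete, here is a minimal sketch of one common post-migration check: comparing row counts and an order-independent checksum between source and target. The function names and the use of `repr()` as the row serialization are our own assumptions for illustration; a real migration would agree on a canonical serialization that both systems produce identically.

```python
import hashlib
from typing import Iterable, Sequence

_MODULUS = 1 << 256  # keeps the running sum the size of one SHA-256 digest


def table_fingerprint(rows: Iterable[Sequence[object]]) -> tuple[int, str]:
    """Return (row_count, order-independent checksum) for a set of rows."""
    count = 0
    combined = 0
    for row in rows:
        # repr() is a stand-in serialization (assumption, see lead-in).
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        # Modular addition is order-independent, and duplicate rows
        # do not cancel each other out as they would with XOR.
        combined = (combined + int.from_bytes(digest, "big")) % _MODULUS
        count += 1
    return count, f"{combined:064x}"


def validate_migration(source_rows, target_rows) -> list[str]:
    """Compare source and target; return a list of human-readable problems."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    problems = []
    if src_count != tgt_count:
        problems.append(
            f"row count mismatch: source={src_count}, target={tgt_count}"
        )
    if src_sum != tgt_sum:
        problems.append("checksum mismatch: row contents differ")
    return problems
```

An empty list means the two sides agree on both count and content. Any entry in the list is a signal to investigate before cutting over.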

Navigating Data Modernization with AWS Solutions

Embarking on a large-scale data modernization journey can be a daunting task, fraught with complexities and potential pitfalls. However, AWS offers a comprehensive suite of services designed to streamline this process and unlock the full potential of your data. Let’s explore how AWS can help you modernize your data infrastructure, overcome common challenges, and accelerate your data modernization initiatives.

1. Data Migration

The data modernization journey begins with the data discovery process, where the required datasets are identified, and their migration order is determined. This crucial step lays the foundation for a smooth and efficient migration. AWS provides powerful services to streamline the process of migrating your data to the cloud:

- AWS Snowball Edge accelerates data migration by securely transferring large datasets offline to an Amazon S3 bucket for staging.

- For Amazon DynamoDB, large objects can be efficiently stored in S3, with pointers maintained in DynamoDB, streamlining data handling and transfer.

- AWS Database Migration Service (DMS) supports ongoing replication of changes during migration, ensuring minimal downtime and data consistency.

- AWS Schema Conversion Tool (SCT) simplifies the transition between database engines by converting the source schema and code objects into a format compatible with the target engine.

After staging the data in Amazon S3, the database backup is imported into the chosen purpose-built AWS database, ready to support modern applications.
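The DynamoDB pointer pattern mentioned above can be sketched as a small planning function. DynamoDB limits a single item to 400 KB, so larger payloads go to S3 and the item keeps only a reference. The attribute names (`pk`, `payload_s3_key`, and so on), the key prefix, and the 350 KB threshold are hypothetical choices for this sketch, not AWS conventions.

```python
import hashlib

# DynamoDB caps one item at 400 KB. This threshold leaves headroom for
# other attributes; the exact value is an assumption for illustration.
INLINE_LIMIT_BYTES = 350 * 1024


def plan_item_storage(item_id: str, payload: bytes, bucket: str) -> dict:
    """Decide whether a payload is stored inline or offloaded to S3."""
    if len(payload) <= INLINE_LIMIT_BYTES:
        # Small enough: keep the payload directly in the DynamoDB item.
        return {
            "dynamodb_item": {"pk": item_id, "payload": payload},
            "s3_object": None,
        }
    # Too large: write the bytes to S3 and store only a pointer.
    key = f"large-objects/{item_id}/{hashlib.sha256(payload).hexdigest()}"
    return {
        "dynamodb_item": {
            "pk": item_id,
            "payload_s3_bucket": bucket,
            "payload_s3_key": key,
            "payload_size": len(payload),
        },
        "s3_object": {"Bucket": bucket, "Key": key, "Body": payload},
    }
```

With boto3, the `s3_object` dictionary matches the keyword arguments of the S3 client's `put_object`, and `dynamodb_item` could be written with a table resource's `put_item(Item=...)`. Writing the S3 object first avoids leaving a pointer that refers to nothing.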

Want to ensure your data stays secure? Check out our blog for the Top 5 Amazon S3 Security Strategies and learn how to implement them effectively!

2. Data Modernization

Unleashing the full potential of your data requires a modern data architecture that enables seamless data transfer between purpose-built services, such as scalable data lakes. AWS offers a suite of services to streamline this process:

- AWS Glue helps securely build and manage end-to-end data pipelines, ensuring seamless data transfers across different environments.

- Amazon Q in QuickSight enhances this data by incorporating AI/ML capabilities, enabling the creation of advanced visualizations and scenarios for deeper insights.

- Additionally, AWS AI/ML services leverage machine learning, artificial intelligence, and generative AI to transform your data, driving innovation and unlocking new opportunities.

This large-scale data modernization empowers businesses to leverage ML, AI, and Generative AI, gaining a competitive edge and fostering innovation.

Learn how Generative BI with Amazon Q in QuickSight can revolutionize your data strategy, providing instant, actionable insights that drive smarter, faster decision-making across your organization!

3. Data Processing

Data processing is a crucial aspect of data modernization, where raw data is transformed into actionable insights. This involves using various AWS services to efficiently process, cleanse, and prepare data for analysis. You can leverage a suite of services to simplify and accelerate data processing, ensuring efficiency and scalability.

- AWS Glue can help you streamline and speed up data transformation tasks with features such as built-in machine learning for data cleansing and automated data preparation, significantly reducing time and effort.

- Amazon EMR can enable you to conduct petabyte-scale data processing and achieve interactive analytics. It can run on Amazon EC2, on Amazon EKS, or as EMR Serverless, so compute resources scale dynamically with the workload. This helps to modernize legacy Hadoop platforms by migrating them to the cloud, enhancing efficiency, scalability, and insights for better decision-making and a competitive edge.

- By leveraging AWS Fargate, you can migrate on-premises containerized data pipelines to AWS and take advantage of its serverless compute, which provisions resources for each task so capacity can grow with data volume.

4. Data Orchestration

As organizations scale, traditional systems struggle to handle increasing data volumes, speed, and variety. This results in performance issues and high costs for hardware, software, and maintenance.

Modern data orchestration tools offer more efficient, flexible, and scalable solutions. These tools streamline workflow management, ensuring smooth task scheduling, dependency handling, and data consistency.

AWS provides services to optimize and manage data workflows:

- AWS Glue Workflows manage data ingestion and transformation, integrating with AWS Glue DataBrew for data cleansing and conversion, before storing it in Amazon S3.

- Amazon Managed Workflows for Apache Airflow (MWAA) orchestrates complex data pipelines, utilizing Apache Spark on Amazon EMR for large-scale data processing, such as annual reporting.

- AWS Step Functions coordinates these workflows, using Standard Workflows for long-running tasks and Express Workflows for high-volume, short-duration, low-latency data processing.
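As an illustration of the orchestration services above, here is a minimal sketch that builds an Amazon States Language definition for a two-step pipeline: a cleansing job followed by a transformation job, both run as AWS Glue jobs. The state names, job names, and retry settings are hypothetical. The `glue:startJobRun.sync` resource is the Step Functions integration that waits for the Glue job to finish.

```python
import json


def build_pipeline_definition(cleanse_job: str, transform_job: str) -> str:
    """Return a state machine definition that runs two Glue jobs in order."""
    glue_run_and_wait = "arn:aws:states:::glue:startJobRun.sync"
    definition = {
        "Comment": "Sketch: cleanse raw data, then transform it",
        "StartAt": "Cleanse",
        "States": {
            "Cleanse": {
                "Type": "Task",
                "Resource": glue_run_and_wait,
                "Parameters": {"JobName": cleanse_job},
                # Retry values are illustrative assumptions.
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 30,
                        "MaxAttempts": 2,
                        "BackoffRate": 2.0,
                    }
                ],
                "Next": "Transform",
            },
            "Transform": {
                "Type": "Task",
                "Resource": glue_run_and_wait,
                "Parameters": {"JobName": transform_job},
                "End": True,
            },
        },
    }
    return json.dumps(definition, indent=2)
```

Because the `.sync` integration pattern waits for job completion, a definition like this is suited to Standard Workflows. Express Workflows are the better fit for short, high-volume steps that do not need to wait on long-running jobs.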

5. Data Storage

The rapid growth in data volume creates the need for scalable storage solutions that can handle large datasets efficiently while keeping costs manageable. Here’s how AWS can help:

- Amazon S3 is an ideal solution for staging large database files, offering highly durable and scalable storage.

- S3 Intelligent-Tiering automatically moves objects between access tiers based on access patterns, ensuring the most cost-effective tier is used.

- Compression and Deduplication reduce the storage footprint by eliminating redundant data and compressing files, helping to save on storage costs.
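To show what compression and deduplication can look like in practice, here is a minimal sketch that stores each distinct file content once, keyed by its SHA-256 hash, and gzip-compresses it. It assumes this step runs on the client side before upload. The function names and the manifest layout are our own, chosen for illustration.

```python
import gzip
import hashlib


def dedupe_and_compress(
    files: dict[str, bytes],
) -> tuple[dict[str, bytes], dict[str, str]]:
    """Store each distinct content once, gzip-compressed.

    Returns (blobs keyed by content hash, manifest of file name -> hash).
    """
    blobs: dict[str, bytes] = {}
    manifest: dict[str, str] = {}
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        if digest not in blobs:
            # First time this content is seen: compress and keep it.
            blobs[digest] = gzip.compress(content)
        # Every file name points at its content hash, duplicate or not.
        manifest[name] = digest
    return blobs, manifest


def restore(name: str, blobs: dict[str, bytes], manifest: dict[str, str]) -> bytes:
    """Recover the original bytes for a file name."""
    return gzip.decompress(blobs[manifest[name]])
```

The savings depend heavily on the data. Repetitive text formats such as CSV or JSON tend to compress well, while already-compressed formats gain little.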

6. Data Security and Compliance

Protecting your data across various endpoints and cloud environments while ensuring compliance with regulatory requirements can be a complex task. AWS provides a range of tools and services designed to simplify this process:

- AWS Key Management Service (KMS) enables robust encryption to secure your data, while AWS Identity and Access Management (IAM) offers precise control over who can access it.

- Amazon Macie, powered by machine learning, helps identify and protect sensitive information, such as personal data or financial records.

- AWS supports compliance programs and regulations including HIPAA, GDPR, and PCI DSS, helping organizations meet critical regulatory standards and maintain trust with their customers.
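As one example of combining encryption and access control, here is a minimal sketch that generates an S3 bucket policy with two deny statements: one rejects uploads that do not request SSE-KMS encryption, and the other rejects any request made without TLS. The bucket name and statement IDs are placeholders. Treat this as a starting point to adapt, not a complete security baseline.

```python
import json


def require_kms_policy(bucket: str) -> str:
    """Return a bucket policy that enforces SSE-KMS uploads and TLS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsWithoutKmsEncryption",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Matches uploads whose encryption header is missing
                # or set to anything other than aws:kms.
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "aws:kms"
                    }
                },
            },
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

Note that the first statement also denies uploads that omit the encryption header entirely, so clients relying on bucket default encryption would need to send the header explicitly.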

Learn more about how Amazon Macie’s advanced capabilities can strengthen your data protection strategy in our blog – Fortifying Healthcare Data Security With ML-Powered Amazon Macie.

7. Data Synchronization and Real-Time Access

Ensuring real-time data synchronization across multiple locations is vital for maintaining data consistency and enabling timely, accurate insights. Without proper synchronization, organizations risk data discrepancies that can hinder decision-making and operational efficiency. AWS offers several services that help:

- AWS Database Migration Service (DMS) enables continuous data replication, ensuring synchronized data across different environments. This helps maintain consistency and reduces the risk of errors in distributed systems.

- Amazon Aurora Global Database provides low-latency access to globally distributed data, allowing users across regions to access the same consistent information quickly and efficiently.

- Amazon AppFlow and Amazon EventBridge support near-real-time integration by enabling seamless data flow between applications and systems, ensuring up-to-date insights across distributed environments.
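For the continuous replication that AWS DMS provides, here is a minimal sketch that assembles the settings for a replication task. It selects every table in one schema and uses the `full-load-and-cdc` migration type, which copies existing data and then keeps applying ongoing changes. The task identifier, ARNs, rule name, and schema name are placeholders supplied by the caller.

```python
import json


def build_replication_task(
    task_id: str,
    source_arn: str,
    target_arn: str,
    instance_arn: str,
    schema: str,
) -> dict:
    """Assemble keyword arguments for a DMS replication task."""
    # Selection rule: include every table ("%") in the given schema.
    table_mappings = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-schema",
                "object-locator": {"schema-name": schema, "table-name": "%"},
                "rule-action": "include",
            }
        ]
    }
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        # Initial copy followed by change data capture.
        "MigrationType": "full-load-and-cdc",
        # DMS expects the table mappings as a JSON string.
        "TableMappings": json.dumps(table_mappings),
    }
```

With boto3, a dictionary like this could be passed to the DMS client as `create_replication_task(**params)`. Switching `MigrationType` to `cdc` would replicate ongoing changes only, which suits cases where the initial load was done another way.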

8. Skills Gap in Big Data Management

Managing complex big data analytics and operations is increasingly challenging for organizations, especially with a growing shortage of skilled professionals in this domain. The intricate nature of tasks like data preparation, warehousing, and processing often demands specialized expertise, making it difficult for teams to keep pace with the rapid evolution of technologies. This skills gap can hinder efficient data management and delay valuable insights critical for decision-making.

AWS provides comprehensive solutions to bridge this gap effectively:

- Managed services like Amazon Redshift for data warehousing, AWS Glue for data preparation, and Amazon EMR for big data processing simplify and automate complex processes. By handling these operations seamlessly, AWS reduces the dependence on in-house expertise, allowing teams to focus on extracting insights from their data.

- AWS Training and Certification programs offer upskilling opportunities, helping professionals gain expertise in data analytics and cloud architecture. These programs empower teams to confidently manage modern data solutions and stay ahead in the competitive landscape.

Cloudelligent’s Real-Life Data Modernization Success

Cloudelligent worked closely with a client to modernize their data infrastructure, making it more efficient and scalable. Here’s how we helped:

- Containerization: We migrated about 170 websites to Amazon Elastic Container Service (ECS) for reliable container management and 100% uptime.

- Database Optimization: We set up Amazon Relational Database Service (RDS) as the backend database for smooth data management.

- Scalable Storage: We leveraged Amazon Elastic File System (EFS) for persistent, shared storage to improve data accessibility.

- Robust Backup and Recovery: We established backup policies with Clumio for Amazon EC2 instances and Amazon Elastic Block Store (EBS) volumes to ensure data continuity and quick recovery.

This modernization transformed our client’s data environment, improving performance and uptime.

Maximize Efficiency with Cloudelligent’s Data Modernization and Analytics Solutions

Large-scale data modernization presents numerous challenges, from managing massive data growth to ensuring security and compliance. At Cloudelligent, we use AWS services to take our Data Modernization Solutions to the next level, giving you the expertise and tools you need to tackle challenges head-on. Whether it’s optimizing storage, ensuring real-time access, or enhancing data security, our tailored approach empowers your organization to modernize data seamlessly and securely.

Book a Free Data Acceleration Assessment with Cloudelligent’s experts today to transform your data strategy and fuel business growth!