The rise of Generative AI applications has been nothing short of extraordinary. In just a few years, we’ve seen them transform industries—from helping doctors diagnose faster to letting retailers personalize your shopping experience before you even realize what you need. AI isn’t just some futuristic buzzword anymore; it’s here, it’s real, and it’s embedded deep into the heart of modern business strategies.

But as exciting as this journey is, there’s a catch: as businesses sprint to embrace Generative AI applications, they’re also opening the door to new kinds of risks. Data security and privacy can’t be an afterthought. They need to be front and center if we want to harness AI’s full potential without inviting disaster.

In this blog, we’ll explore why securing generative AI applications is crucial and the evolving risks they bring. We’ll also cover the best practices based on AWS’s latest frameworks to protect your sensitive data without hindering innovation.

Data Security Challenges in Generative AI

As exciting as Generative AI applications are, they also come with a brand-new set of challenges that traditional systems simply didn’t have to deal with. These aren’t just minor bumps in the road. They are serious hurdles that can compromise customer trust, regulatory compliance, and even your brand’s reputation if not addressed properly. Let’s dive into what makes securing Generative AI applications particularly tricky and why a proactive, thoughtful approach to data security is more important now than ever before.

Unlike traditional machine learning, which relies on carefully labeled data and predictable outputs, Generative AI applications deal with vast, often unstructured datasets. Basically, everything from customer logs to proprietary research. These models don’t just analyze data; they create new content, blurring the lines between training data and output.

The nature of Generative AI, where models create new content based on vast datasets, introduces unique security risks. For instance, sensitive information might surface during model interactions (inference risks), not just during initial training. Consequently, prompt injection mitigation becomes critical, as malicious actors could craft prompts that coax confidential information from the model, even if that data was meant to remain hidden.

Real-world examples have already emerged. A Reuters investigation reported cases where sensitive legal data, submitted to a public LLM without proper safeguards, leaked into outputs seen by unauthorized users. This demonstrates the urgent need to treat AI model security as seriously as network security.

Type of Data Security Risks in Generative AI Workloads

1. Data Exposure Risks

This happens when sensitive information such as customer records, financial data, or health data accidentally becomes part of what the AI model “remembers” or uses to generate responses. Imagine if you trained your AI assistant with internal legal documents or patient records but didn’t put strict boundaries on which data gets exposed during interaction.

The risk? Someone could ask an innocent-sounding question and receive a confidential answer they were never meant to see. Without careful fine-tuning, Generative AI applications can inadvertently “leak” internal secrets into everyday conversations. It’s like leaving a private diary open in a public park; you didn’t mean to, but the information is still out there.

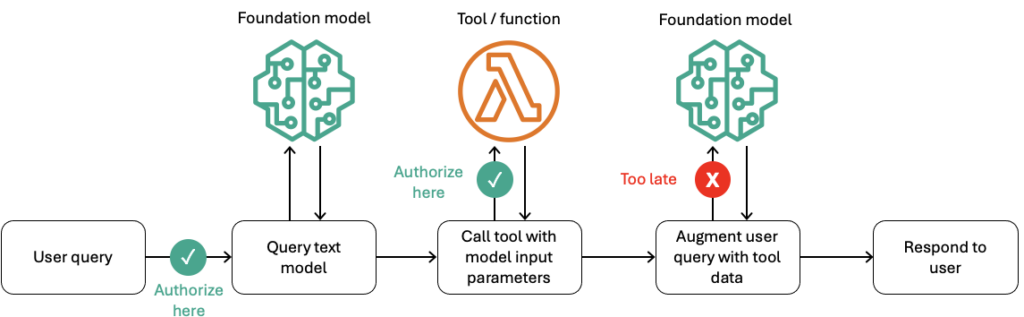

Figure 1: Example flow when a user makes a query that uses a tool or function with an LLM (aws.amazon.com)

2. Authorization Gaps

LLMs (Large Language Models) are brilliant at creating text, images, or code, but they’re completely blind when it comes to who should see what. They don’t understand permissions or access controls the way databases or secured apps do.

For example, your customer support chatbot built on an LLM might generate detailed invoices or internal company reports just because someone phrased their query cleverly. Unless you integrate access control in AI systems at the application level, the model can’t distinguish between a regular user and an admin. Imagine giving every visitor to a hotel the master key; the model opens doors without checking if the guest should be allowed in.

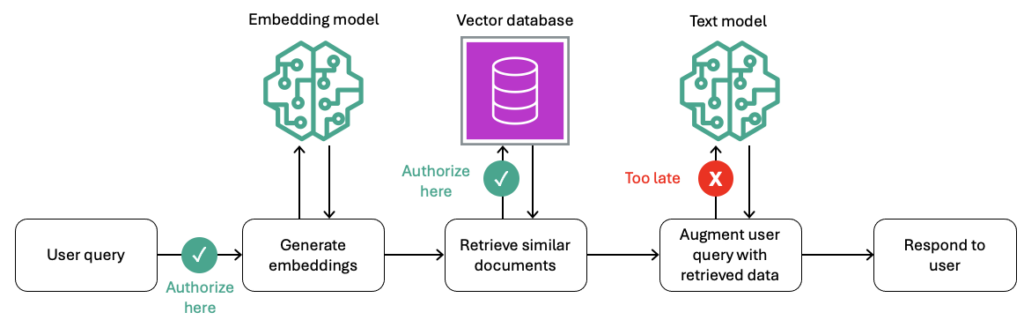

Figure 2: Authorize data access to the vector database on request, not data leaving an LLM (aws.amazon.com)

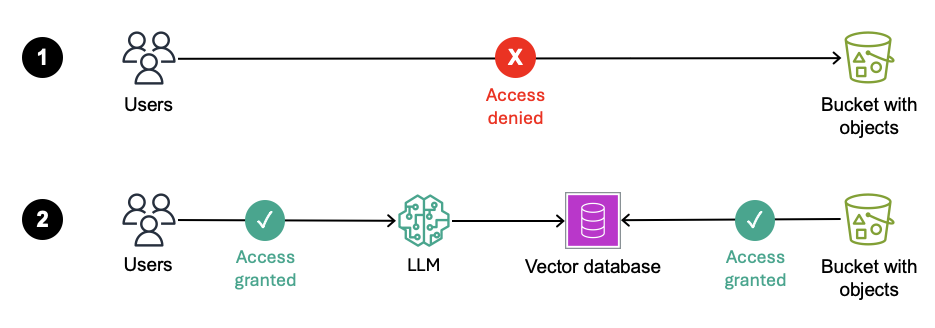

3. Confused Deputy Problem

This is a classic security issue, and it gets even trickier with Generative AI applications.

Think of it this way: you wouldn’t allow someone off the street to walk into your company’s vault. But what if they send in a friendly-looking robot that you authorized? That robot (your AI) could unintentionally fetch highly sensitive data, thinking it’s doing the right thing, because it wasn’t properly instructed to verify who asked.

In real terms, a user who can’t directly access your Amazon S3 buckets might use an AI-powered app you built to indirectly retrieve confidential documents from those buckets. The app is authorized, not the user. This is a case of a classic “confused deputy”.

Without strong rules about who the AI can fetch information for, you risk turning your secure systems into open windows.

Figure 3: Access is denied to users who go straight to the S3 bucket. But access is granted to users who access the LLM (aws.amazon.com)

Best Practices for Robust Data Authorization in Generative AI Applications

Securing Generative AI applications requires more than just reacting after something goes wrong. Think of locking your front door before you leave, not after someone sneaks in. As AI becomes more powerful, smarter ways are needed to protect the sensitive information it handles. Building strong data authorization from the start is not just a nice-to-have; it is essential. This approach ensures we can enjoy the benefits of AI without putting privacy, trust, and security at risk. So, let’s break down the simple steps you can take to stay ahead of the risks and design AI systems that are secure from day one.

1. Data Governance as the First Line of Defense

Before you can protect data, you need to know exactly what you have.

- Data Curation: Use services like AWS Glue, Amazon DataZone, and AWS Lake Formation to catalog, label, and classify your data. Know where sensitive data lives.

- Segmentation: Don’t mix public, internal, and confidential datasets. Assign clear boundaries. Segment access by team, project, or compliance need.

By establishing rigorous governance, you not only protect data. You also make compliance with HIPAA, GDPR, and other regulations a lot smoother. Solid governance is the backbone of AI compliance and governance.

2. AWS Security Frameworks and Tools for Generative AI

AWS provides powerful frameworks and tools to help you structure and strengthen your security posture for Generative AI applications. These resources are designed to not only protect sensitive data but also to help you build AI systems that are resilient, compliant, and trustworthy from the ground up. Here are some of the key frameworks and tools you should know about:

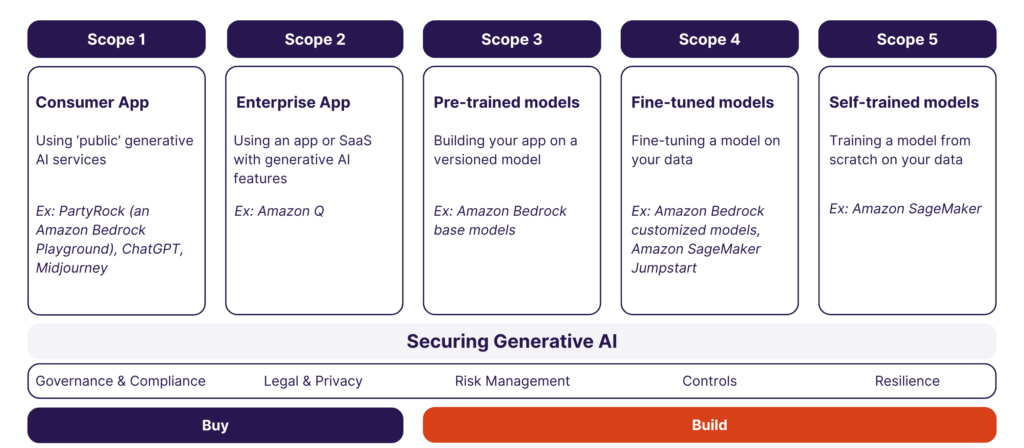

AWS Generative AI Security Scoping Matrix

Figure 4: AWS Generative AI Scoping Matrix

This matrix helps you ask the right questions at every phase, from the moment you first collect data to when you prompt the model and when agents interact with external systems. Instead of treating security like a one-time task, this matrix guides you to build protection into every layer of your AI stack right from the start.

Example: It’ll prompt you to think, “Have you validated input prompts for security? Are agent actions fully authorized?”

Amazon Bedrock Guardrails

Imagine if you had a smart security filter sitting between your users and the AI application.

Amazon Bedrock Guardrails are exactly that: they monitor the output generated by the LLM and block unsafe, biased, harmful, or non-compliant content before it ever reaches the user.

They’re not about “censorship”, they’re about making sure your AI responses meet your company’s safety, ethics, and legal standards.

Example: If someone tries to use your AI to generate hate speech, Amazon Bedrock Guardrails will catch and block it before any damage is done.

Amazon Bedrock Agents and Session Attributes

This is AWS’s clever solution for solving one of the biggest AI problems: “Who is actually making this request?”

Session attributes act like a sealed envelope containing the real identity and permissions of the user.

When an AI agent needs to fetch data or take action, it refers to the session attributes, not just the prompt, to know what the user is allowed to do.

Example: Even if someone tricks the AI prompt into pretending to be a doctor, the session attributes will reveal they’re just a receptionist and would block unauthorized data access.

Metadata Filtering in Amazon Bedrock Knowledge Bases

Suppose you have thousands of internal documents, some meant only for HR, some for Finance, and others for all staff.

Instead of storing these separately, you can add “tags” (metadata) to each document.

When someone queries the AI, metadata filtering ensures they only get results they’re authorized to see, based on those tags.

Example: An HR employee searching for “salary policy” would only retrieve HR documents, not sensitive Finance projections.

This keeps sensitive information compartmentalized automatically, even as your knowledge base grows. These layers create a zero-trust architecture in AI, where no request is automatically trusted.

Want a deeper dive into securing your AI workflows with Amazon Bedrock? Check out our blog on Harnessing Amazon Bedrock Security for Generative AI Applications.

3. Implementing Defense-in-Depth Strategies for Generative AI

Security isn’t one magic bullet; it’s a layered strategy.

- Align with OWASP Top 10 for LLMs: Particularly “Excessive Agency,” where AI models are given too much authority, and “Improper Output Handling,” where dangerous responses leak.

- Separation of Duties: Make sure the components managing prompts, tools, and data are distinct.

- Prompt Sanitization: Sanitize inputs and outputs to prevent cross-prompt contamination.

- Function Sandboxing: API calls initiated by LLMs should occur in hardened, sandboxed environments to prevent wider system compromise.

Collectively, these techniques underpin confidential computing in AI environments, where sensitive workloads are isolated and protected.

Curious about how to build secure Generative AI applications with Amazon Bedrock Agents? Explore the 9 Key Strategies on best practices and tools to ensure your AI systems are both powerful and secure.

4. Secure Model Training and Data Integration

When it comes to model training, ensuring the security of both your data and the model itself is paramount. Securing your AI models starts with the data you use for training, fine-tuning, and inference. It’s essential to handle sensitive data with the utmost care to prevent leaks and unauthorized access. Here are a few key practices to help you maintain a secure AI development lifecycle:

- Sensitive Data Hygiene: Only include absolutely necessary data in model training. Assume anything used in fine-tuning could leak.

- Metadata Filtering for Inference: If you use Retrieval-Augmented Generation (RAG) pipelines, metadata tagging ensures only appropriate documents augment the LLM’s prompt.

Amazon Bedrock Knowledge Bases make it easy to align AI data encryption and authorization policies, even across vast document libraries.

5. Monitoring, Guardrails, and Incident Response

Developing a secure generative AI system doesn’t end with deployment. Ongoing vigilance is crucial for maintaining security and performance. Without continuous monitoring and effective guardrails in place, even the most robust systems can become vulnerable to emerging threats. In addition, having a clear incident response plan ensures that you are prepared for any unexpected security events.

- Monitoring: Implement real-time alerting for suspicious patterns: bulk retrieval, anomalous queries, or repeated edge-case explorations.

- Guardrails vs. Authorization: Remember—guardrails protect against bad content but don’t validate “who” should access “what.” Real authorization must happen at the application layer.

- Incident Response: Document your breach response protocols. Test them. Simulate insider threat scenarios. Rapid response can limit fallout dramatically.

Resilient Generative AI applications don’t just run well—they fail safely.

6. Compliance and Regulatory Alignment

You can’t afford to treat compliance as a mere checkbox or afterthought. For Generative AI applications, this means going beyond basic compliance and embedding it into every part of your workflow, from development to deployment. Your approach must be thorough, consistent, and aligned with industry standards. It is important to:

- Honor Regulations: Structure data access and usage according to GDPR, HIPAA, CCPA, and other frameworks.

- Audit Everything: Enable AWS CloudTrail, detailed IAM policies, and session logs.

With these practices, your AI deployments won’t just be innovative—they’ll also be defensible in an audit.

The transformative power of Generative AI applications comes with a heavy responsibility: protecting sensitive data at every stage. From proper data governance to defense-in-depth security architectures, from real-time monitoring to meticulous compliance. It all matters!

By embedding Generative AI security best practices from day one, you future-proof your organization’s innovation, build trust with customers, and avoid the costly consequences of data breaches.

As we step further into the age of AI, let’s ensure that security isn’t just a bolt-on feature but a foundational principle. Safe AI is smart AI.

Keep Your Data Secure and Your AI Smarter with Cloudelligent

At Cloudelligent, we know that securing your data in Generative AI starts with implementing effective authorization mechanisms. Our AWS-native solutions are built to enforce fine-grained access controls, mitigate unauthorized access, and ensure your Gen AI applications remain secure, compliant, and ready for large-scale, real-world deployment.

From orchestrating secure workflows with Amazon Bedrock Agents to building RAG pipelines with built-in access controls, we help you embed security into every stage of your AI lifecycle.

Ready to strengthen your data security in Generative AI applications? Schedule your Free Cloud AI and Machine Learning Consultation with Cloudelligent today and start building secure, data-driven AI solutions with confidence.